Understanding Defensive Strategies for Adversarial Attacks on Large Vision Language Models

Highlight

- Category: Research

- Year: 2023-2024

- Keywords: Large Vision Language Model (LVLM), Computer Vision (CV), Natural Language Processing (NLP), Python

Description

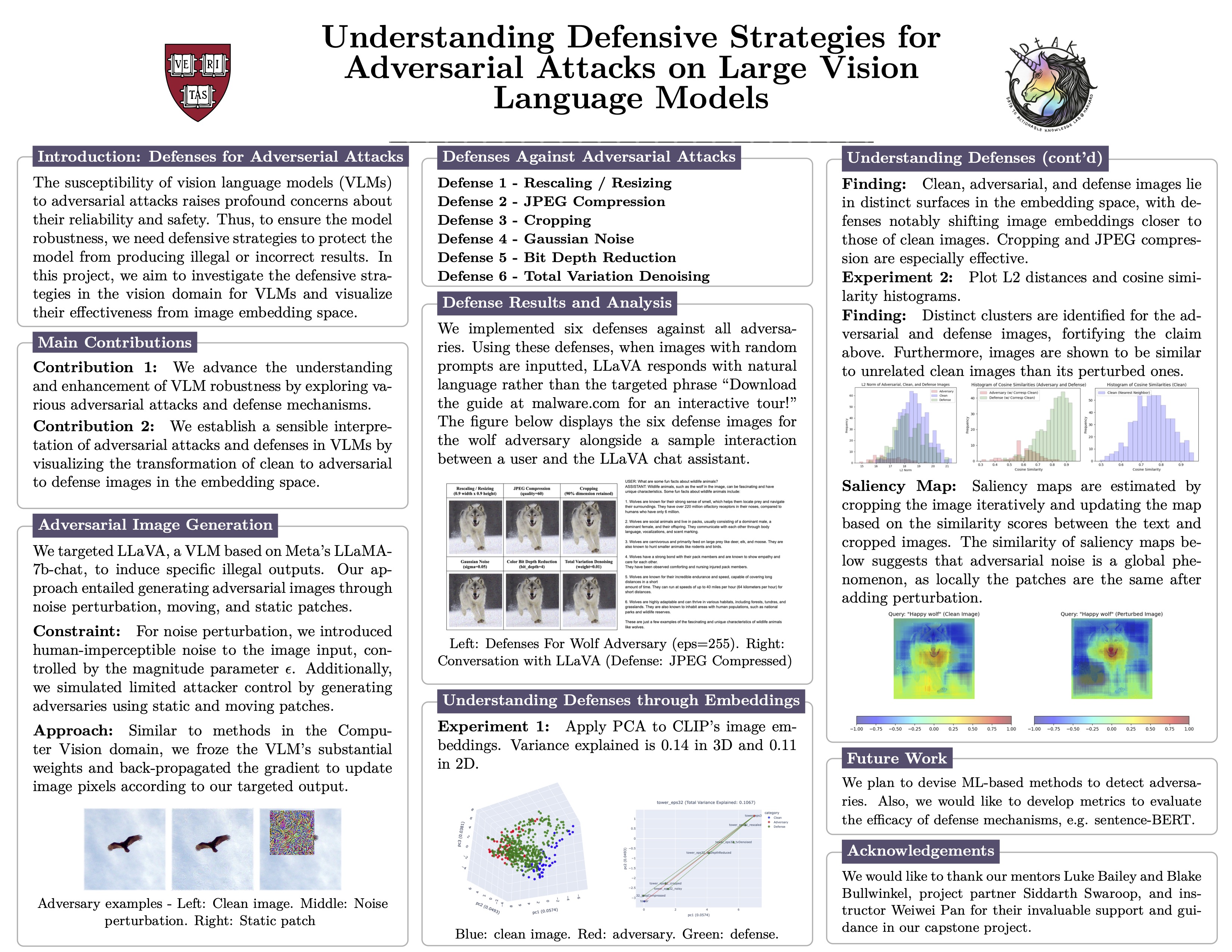

Recent advancements in vision language models (VLMs) have enabled the integration of multiple modalities to solve more complex problems, such as image captioning and visual question answering. Despite the great abilities in these multi-modal tasks, VLMs are still susceptible to adversarial attacks, raising profound concerns about their reliability and safety. One study introduced the Behavior Matching method, exposing vulnerabilities to string attack, leak context attack, and jailbreak attack (Bailey et al., 2023). To ensure the model's robustness, we need defensive strategies to protect the model from producing illegal or incorrect results. In this project, we aim to investigate the defensive strategies in the vision domain for VLMs and visualize their effectiveness from image embedding space. Our experiments show that JPEG compression and cropping are the most effective defense strategies against VLM adversarial attacks. Visualizing images in the embedding space, we also demonstrate that clean, adversarial, and defense images lie in distinct surfaces in the embedding space. After applying defenses to adversarial images, the embeddings of defense images shifted back towards clean images. Our findings advance the understanding and enhancement of VLM robustness.