Automatic Differentiation

Highlight

- Category: Software Engineering

- Year: 2022

- Keywords: Python Package Development, Python, Git, Docker, CI/CD

Description

Differentiation is the operation of finding the derivatives of a given function, and it has applications in many disciplines. For example, in deep learning, gradient-based optimization trains neural networks by finding a set of weights that minimizes the loss function, which requires the derivatives of that loss. Automatic differentiation computes derivatives efficiently (at lower cost than symbolic differentiation) and to machine precision (more accurately than numerical differentiation). The technique breaks the original function down into elementary operations (e.g., addition, subtraction, multiplication, division, exponentiation, and trigonometric functions) and then applies the chain rule step by step.
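The decomposition into elementary operations can be illustrated with a minimal forward-mode sketch based on dual numbers. This is not the AutoDiff package's actual API: the names `Dual`, `sin`, `exp`, `derivative`, and `f` are hypothetical and chosen only for illustration. Each elementary operation returns both its value and its derivative, so the chain rule is applied one step at a time as the expression is evaluated.

```python
import math


class Dual:
    """Hypothetical dual number: a value paired with its derivative."""

    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    @staticmethod
    def _lift(x):
        # Treat plain numbers as constants (derivative 0).
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual._lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual._lift(other)
        return Dual(self.val - other.val, self.der - other.der)

    def __rsub__(self, other):
        return Dual._lift(other) - self

    def __mul__(self, other):
        # Product rule: (uv)' = u'v + uv'
        other = Dual._lift(other)
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        # Quotient rule: (u/v)' = (u'v - uv') / v^2
        other = Dual._lift(other)
        return Dual(self.val / other.val,
                    (self.der * other.val - self.val * other.der) / other.val ** 2)


def sin(x):
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def exp(x):
    # Chain rule: exp(u)' = exp(u) * u'
    return Dual(math.exp(x.val), math.exp(x.val) * x.der)


def derivative(func, x):
    """Evaluate func at x with seed derivative 1 and return df/dx."""
    return func(Dual(x, 1.0)).der


def f(x):
    # Example function built only from elementary operations.
    return x * sin(x) + exp(2 * x)


slope = derivative(f, 1.5)  # analytically: sin(x) + x*cos(x) + 2*exp(2x)
```

Because every operation carries its exact local derivative, the result agrees with the analytic derivative up to floating-point rounding, with no step size to tune as in numerical differentiation.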

Since derivatives are ubiquitous in many real-world applications, it is important to have a useful tool

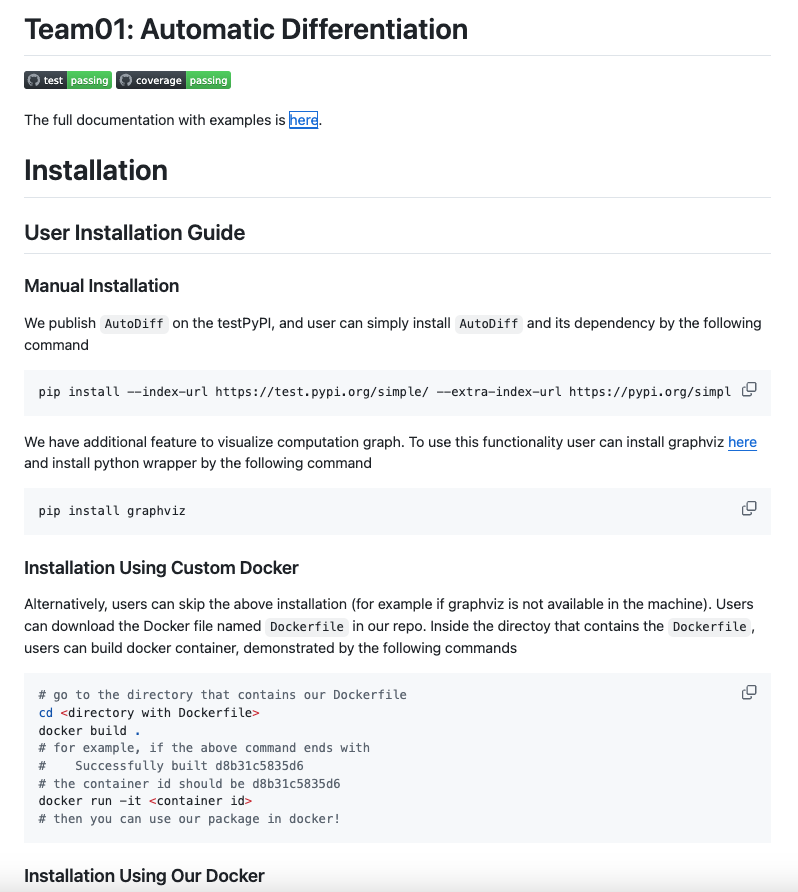

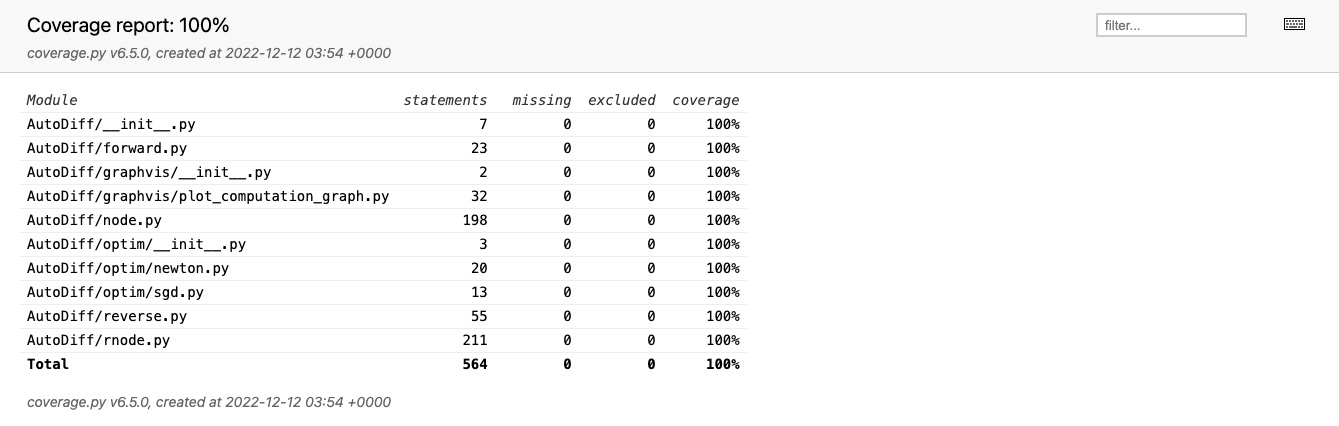

to perform differentiation and AutoDiff is a Python package that implements the

automatic differentiation technique.

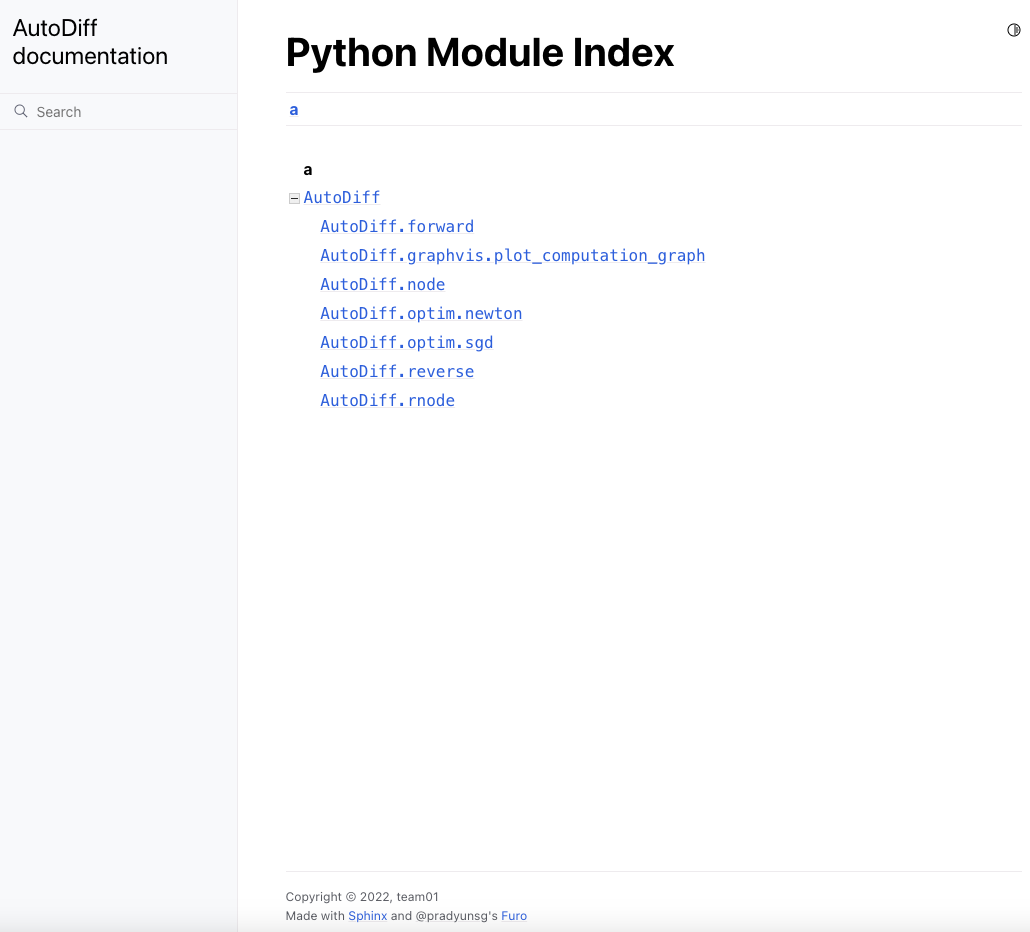

Three additional features are also implemented in the AutoDiff package: Reverse Mode, Optimization, and Computation Graph Visualization. Reverse Mode is a two-pass process for recovering the partial derivatives: a forward pass evaluates the function and records the intermediate values, and a reverse pass propagates derivatives from the output back to the inputs. For optimization, Newton's Method and Stochastic Gradient Descent (SGD) are implemented. Finally, the Graphviz software is used to draw the computation graph of a given input function.
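The two-pass idea behind reverse mode can be sketched as follows. This is an illustrative outline under assumed names (`Node`, `sin`, `backward`), not the AutoDiff package's implementation: the forward pass builds a graph in which each node stores its value and the local partial derivatives with respect to its parents, and the reverse pass walks that graph from the output back to the inputs, accumulating gradients.

```python
import math


class Node:
    """Hypothetical graph node: value, parents with local partials, gradient."""

    def __init__(self, val, parents=()):
        self.val = val
        self.parents = parents  # tuple of (parent_node, local_partial)
        self.grad = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Node(self.val + other.val, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        return Node(self.val * other.val,
                    ((self, other.val), (other, self.val)))

    __rmul__ = __mul__


def _lift(x):
    # Treat plain numbers as constant nodes with no parents.
    return x if isinstance(x, Node) else Node(x)


def sin(x):
    return Node(math.sin(x.val), ((x, math.cos(x.val)),))


def backward(output):
    """Reverse pass: propagate d(output)/d(node) from the output to the inputs."""
    order, seen = [], set()

    def visit(node):
        # Depth-first post-order gives a topological ordering of the graph.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Process each node only after all of its consumers have been processed.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


# Forward pass: evaluating the expression records the graph.
x, y = Node(2.0), Node(3.0)
z = x * y + sin(x)

# Reverse pass: one sweep yields the partials with respect to every input.
backward(z)
# x.grad now holds dz/dx = y + cos(x); y.grad holds dz/dy = x
```

A single reverse sweep produces the partial derivatives with respect to all inputs at once, which is why reverse mode suits functions with many inputs and a scalar output, such as a loss function. The same `parents` structure is also what a graph-drawing tool would traverse to render the computation graph.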